Intro to Machine Learning

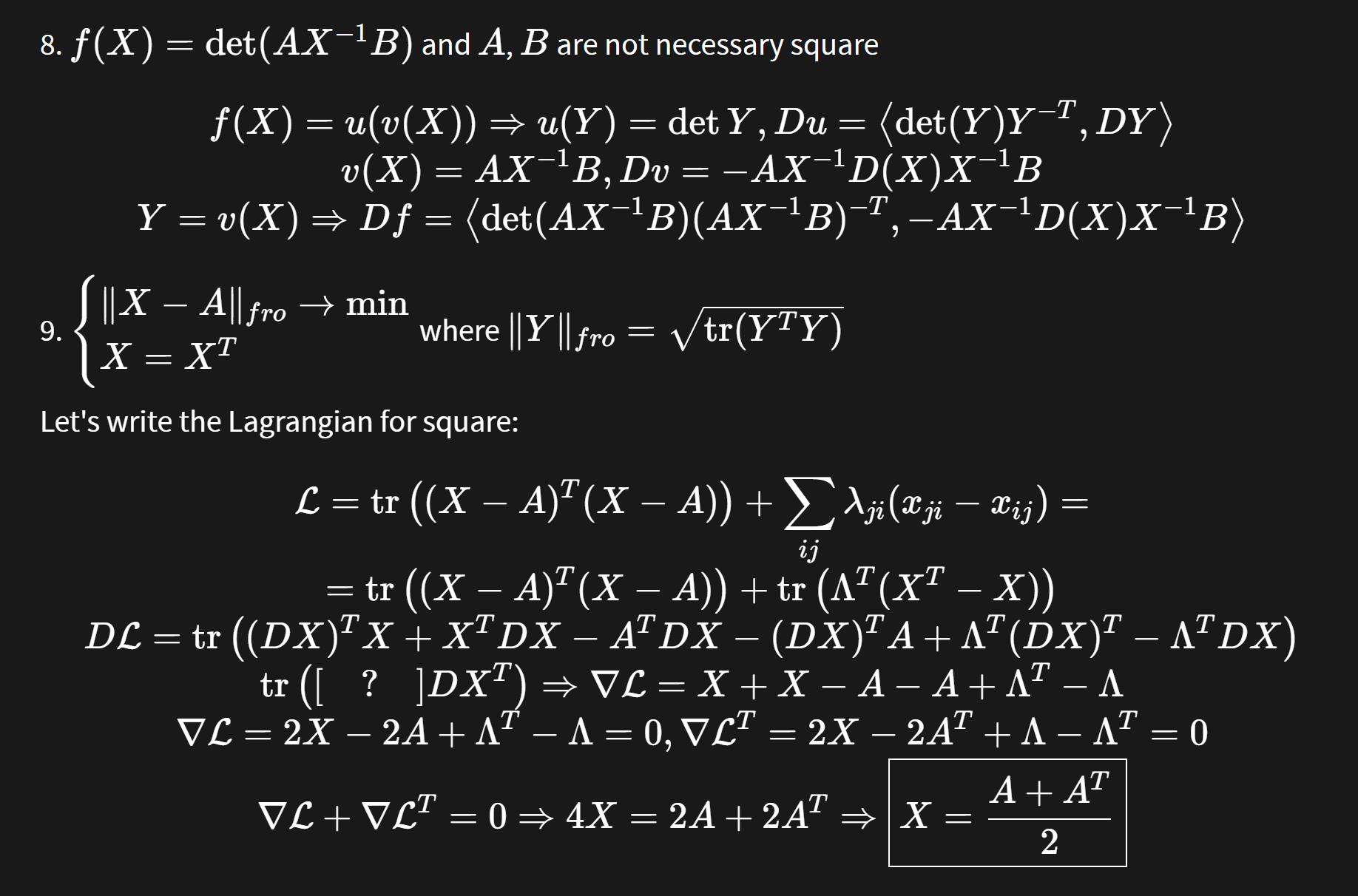

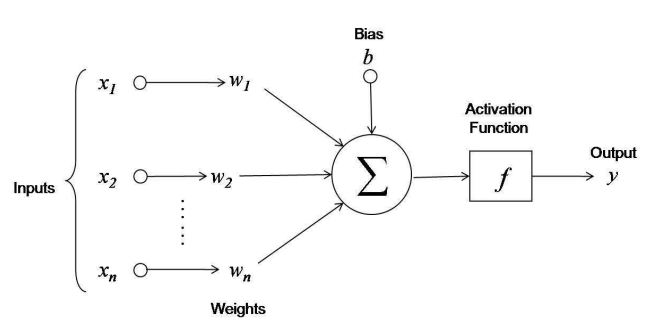

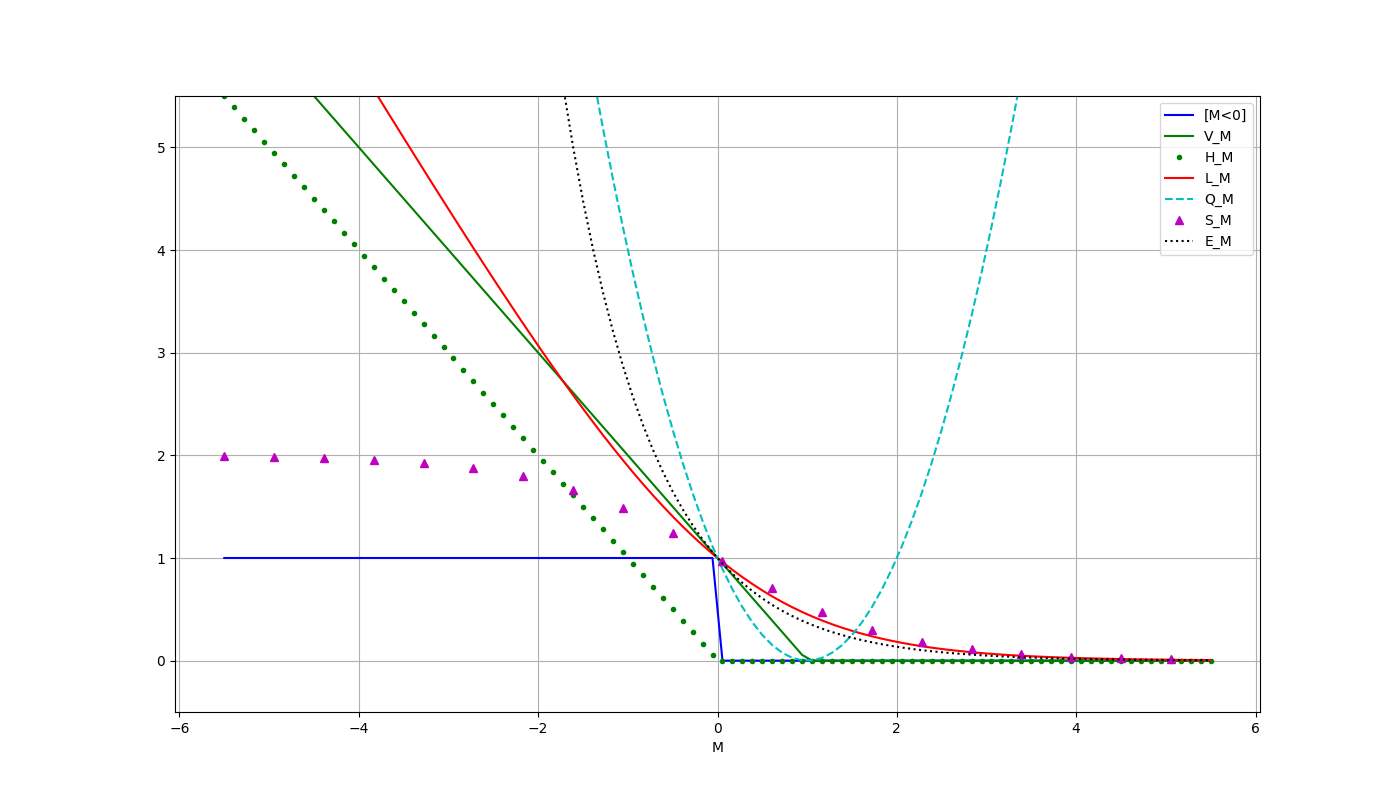

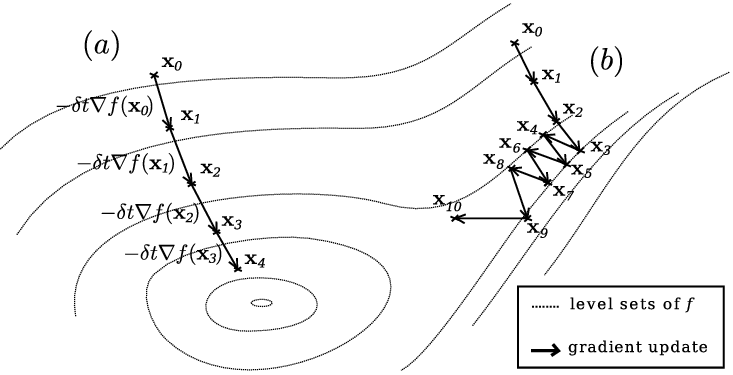

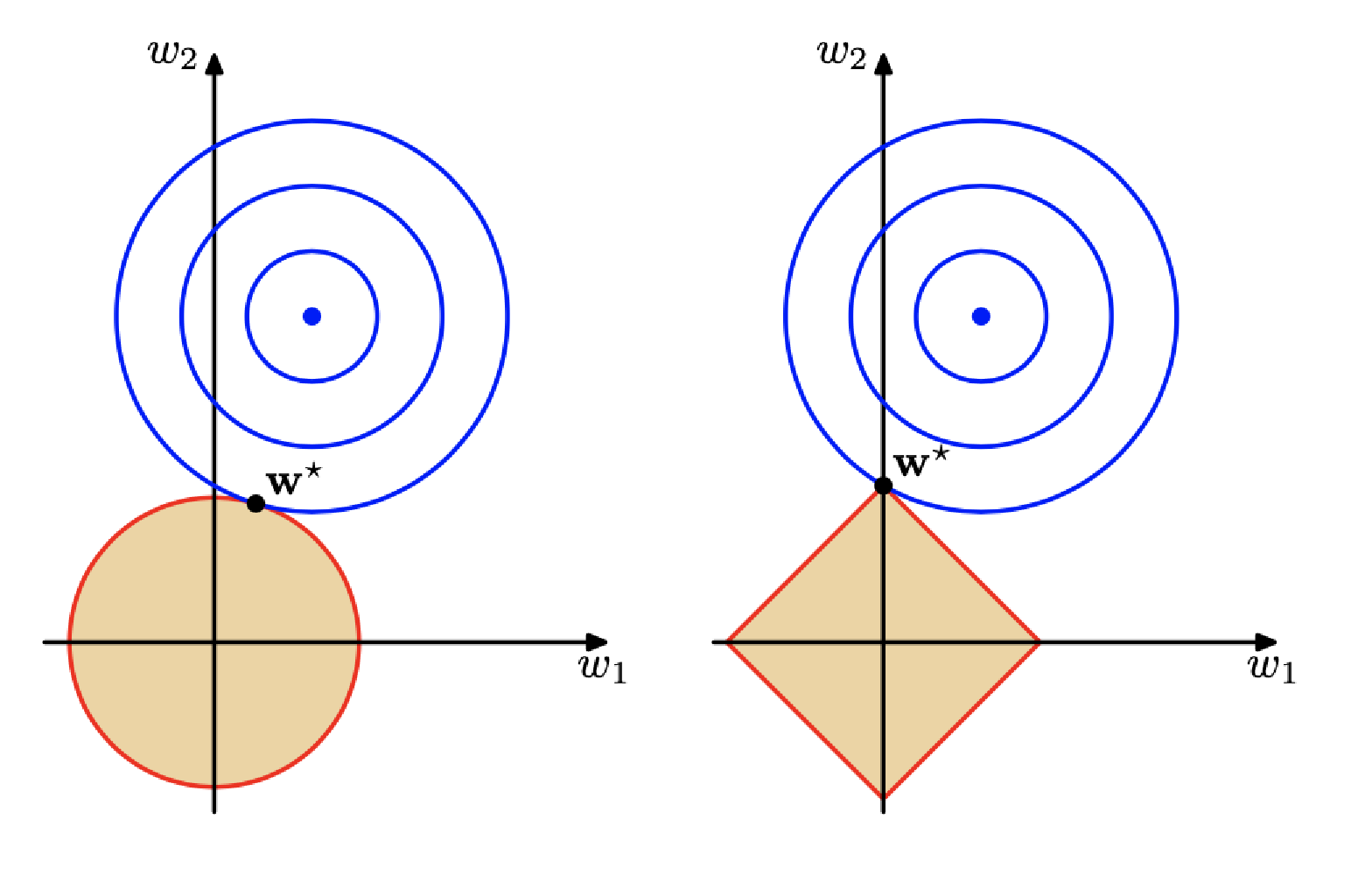

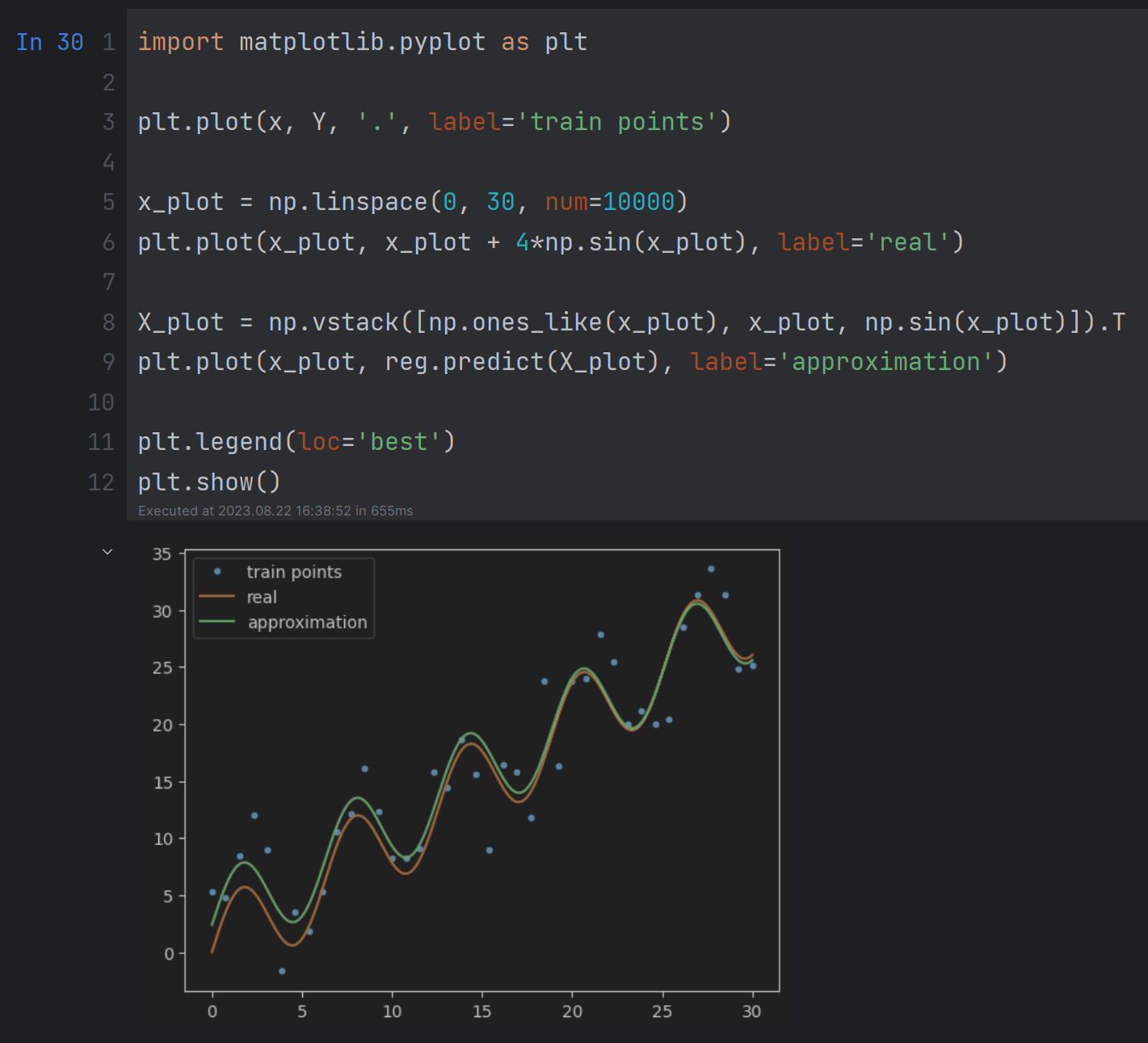

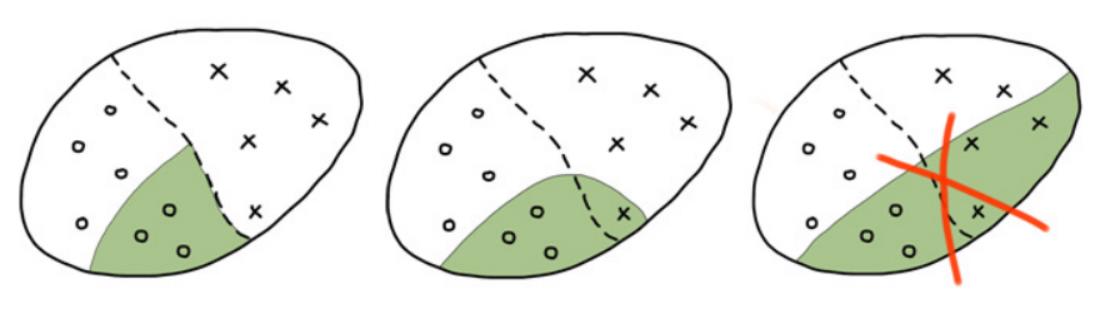

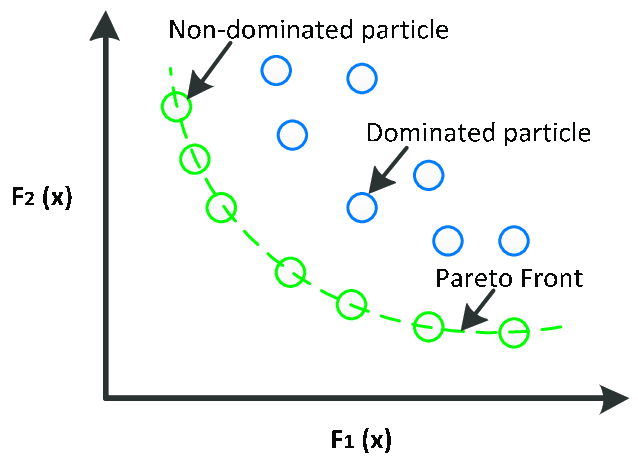

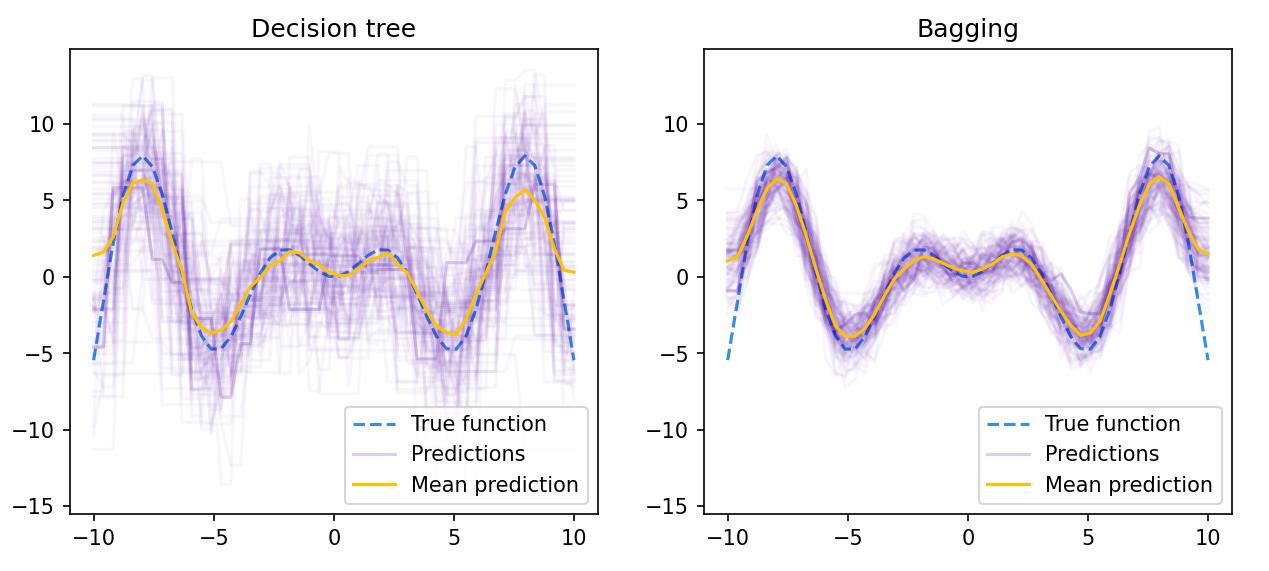

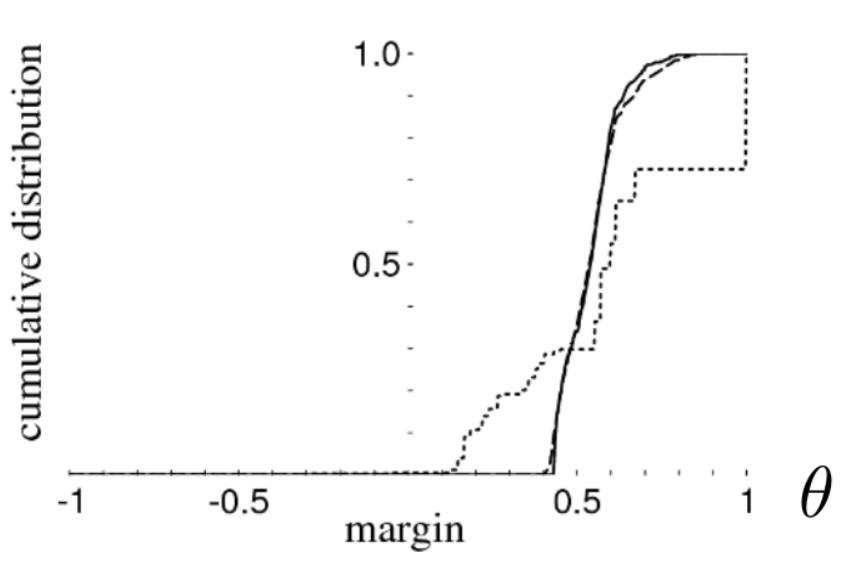

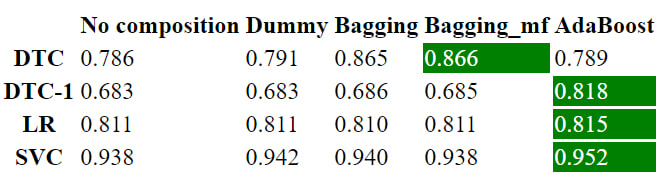

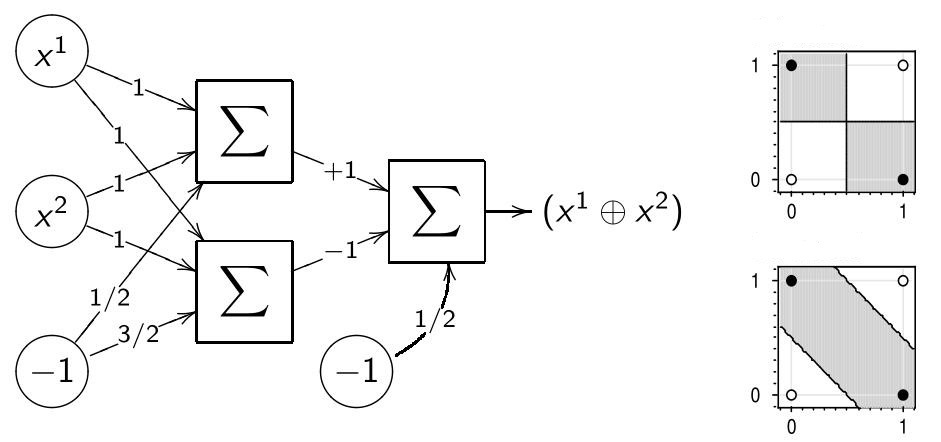

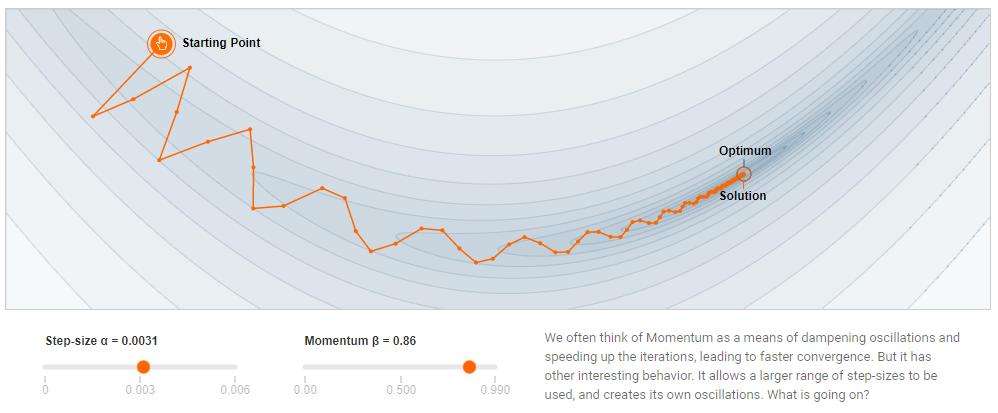

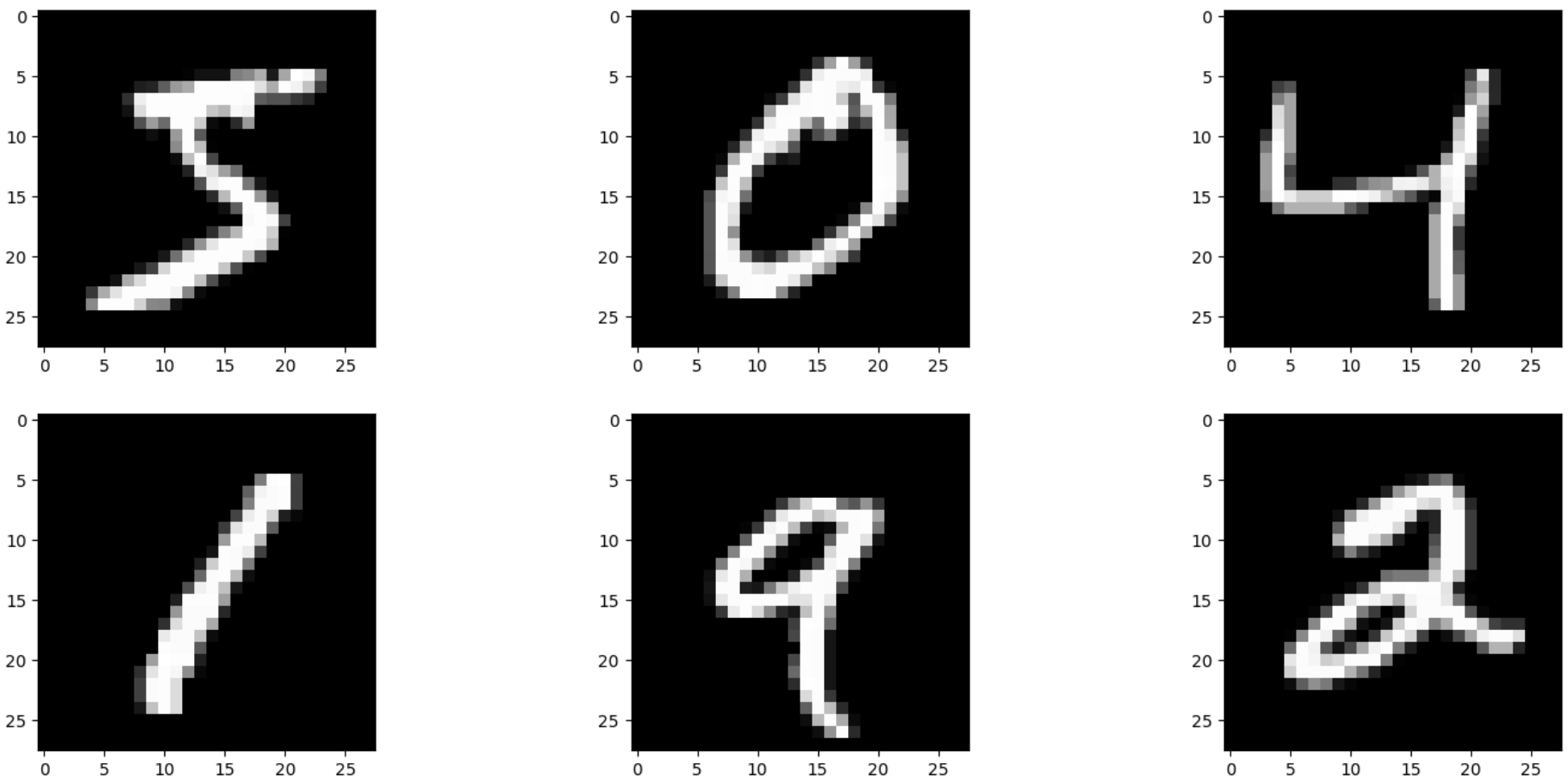

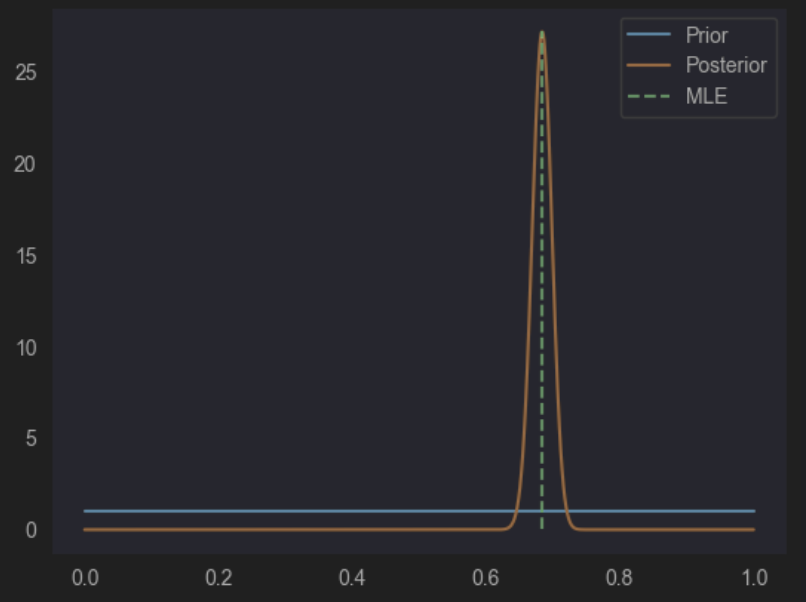

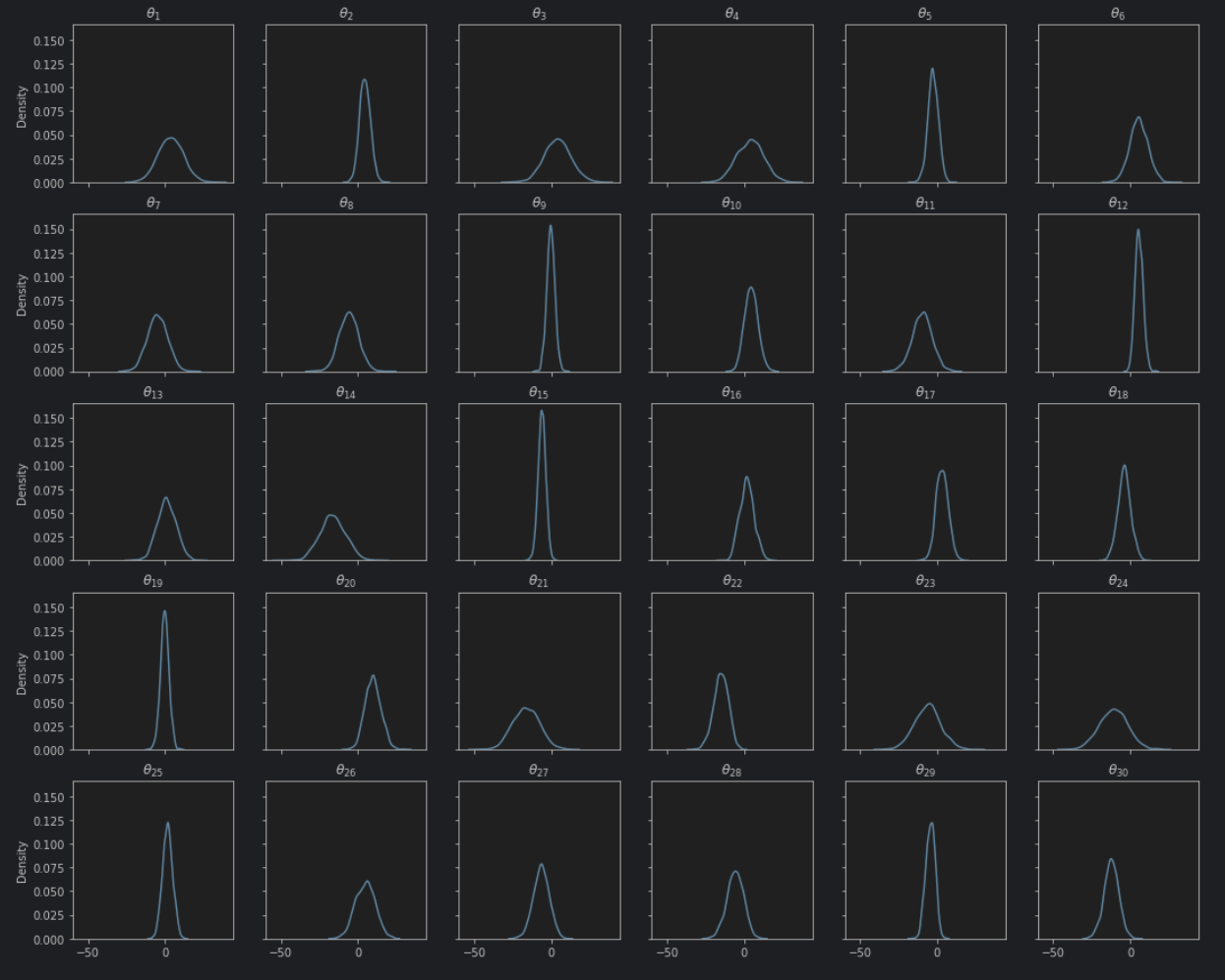

- Main concepts of machine learning: learning from precedents (supervised learning), objects, features, answers, models of algorithms, learning methods, empirical risk, and overfitting
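A minimal sketch of these notions in Python, assuming a toy one-feature regression task, squared loss, and a linear model fitted by least squares. All function names here (`make_sample`, `empirical_risk`, `fit_linear`, `fit_memorizer`) are hypothetical and chosen for illustration. Objects are numbers `x`, answers are `y`, a learning method maps a training sample to an algorithm, and empirical risk is the average loss over a sample. The "memorizer" illustrates overfitting: zero empirical risk on the training sample but a larger error on new objects.

```python
import random

# Hypothetical toy task: each object has one feature x; the true dependency is y = 2x + 1 plus noise.
def make_sample(n, seed):
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]
    return xs, ys

def empirical_risk(algorithm, xs, ys):
    """Mean squared loss of the algorithm over a sample (objects xs, answers ys)."""
    return sum((algorithm(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_linear(xs, ys):
    """Learning method for the model a(x) = w0 + w1*x: closed-form least squares,
    i.e. the weights that minimize empirical risk on the training sample."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w1 = sxy / sxx
    w0 = my - w1 * mx
    return lambda x: w0 + w1 * x

def fit_memorizer(xs, ys):
    """A deliberately overfitting learner: returns the answer of the nearest training object."""
    table = list(zip(xs, ys))
    return lambda x: min(table, key=lambda pair: abs(pair[0] - x))[1]

train_x, train_y = make_sample(30, seed=1)
test_x, test_y = make_sample(1000, seed=2)

linear = fit_linear(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

linear_train = empirical_risk(linear, train_x, train_y)
linear_test = empirical_risk(linear, test_x, test_y)
memorizer_train = empirical_risk(memorizer, train_x, train_y)  # zero: it recalls every training answer
memorizer_test = empirical_risk(memorizer, test_x, test_y)     # larger than linear_test on new objects

print(f"linear:    train {linear_train:.3f}, test {linear_test:.3f}")
print(f"memorizer: train {memorizer_train:.3f}, test {memorizer_test:.3f}")
```

Low empirical risk alone says little about quality on new data, which is exactly why the training error of the memorizer is misleading.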

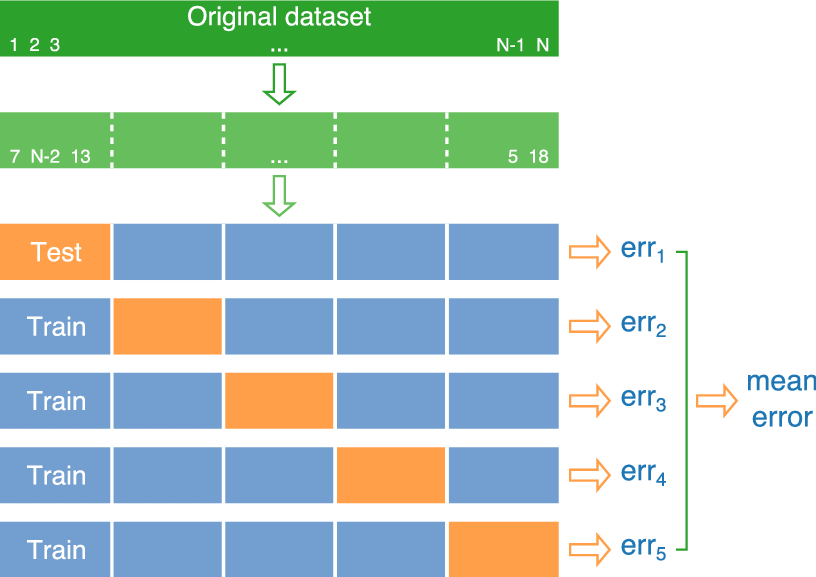

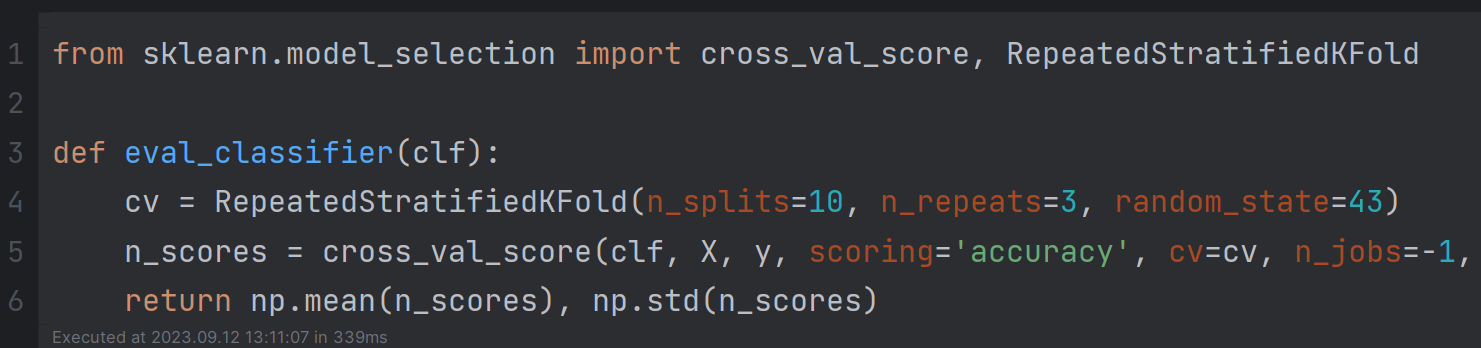

- Detecting overfitting by estimating generalization error: HoldOut, LeaveOneOut, CrossValidation
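The three procedures differ only in how the sample is split into training and control parts. Below is a minimal Python sketch, assuming squared loss and a one-feature least-squares learner; the function names (`fit_linear`, `hold_out`, `cross_validation`, `leave_one_out`) and the default parameters are hypothetical. LeaveOneOut is written as the special case of k-fold cross-validation with k equal to the sample size.

```python
import random

def fit_linear(xs, ys):
    """Least-squares fit of a(x) = w0 + w1*x (needs at least two distinct x values)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w1 = sxy / sxx
    w0 = my - w1 * mx
    return lambda x: w0 + w1 * x

def subset(values, indices):
    return [values[i] for i in indices]

def hold_out(fit, xs, ys, test_fraction=0.3, seed=0):
    """HoldOut: train once on one part of the sample, measure error on the held-out rest."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = max(1, int(len(xs) * test_fraction))
    test, train = idx[:n_test], idx[n_test:]
    model = fit(subset(xs, train), subset(ys, train))
    return sum((model(xs[i]) - ys[i]) ** 2 for i in test) / len(test)

def cross_validation(fit, xs, ys, k=5, seed=0):
    """k-fold CrossValidation: every object is held out exactly once, in one of k folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    total_loss = 0.0
    for fold in range(k):
        test = idx[fold::k]
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        model = fit(subset(xs, train), subset(ys, train))
        total_loss += sum((model(xs[i]) - ys[i]) ** 2 for i in test)
    return total_loss / len(xs)

def leave_one_out(fit, xs, ys):
    """LeaveOneOut: the extreme case k = n, where each object forms its own fold."""
    return cross_validation(fit, xs, ys, k=len(xs))

# Usage on a noisy toy sample (y = 2x + 1 plus Gaussian noise).
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(40)]
ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]
print(hold_out(fit_linear, xs, ys),
      cross_validation(fit_linear, xs, ys),
      leave_one_out(fit_linear, xs, ys))
```

A single hold-out split is the cheapest, but its estimate depends on which objects land in the control part; cross-validation averages over k splits, and leave-one-out uses every object as a control exactly once at the cost of n separate trainings.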

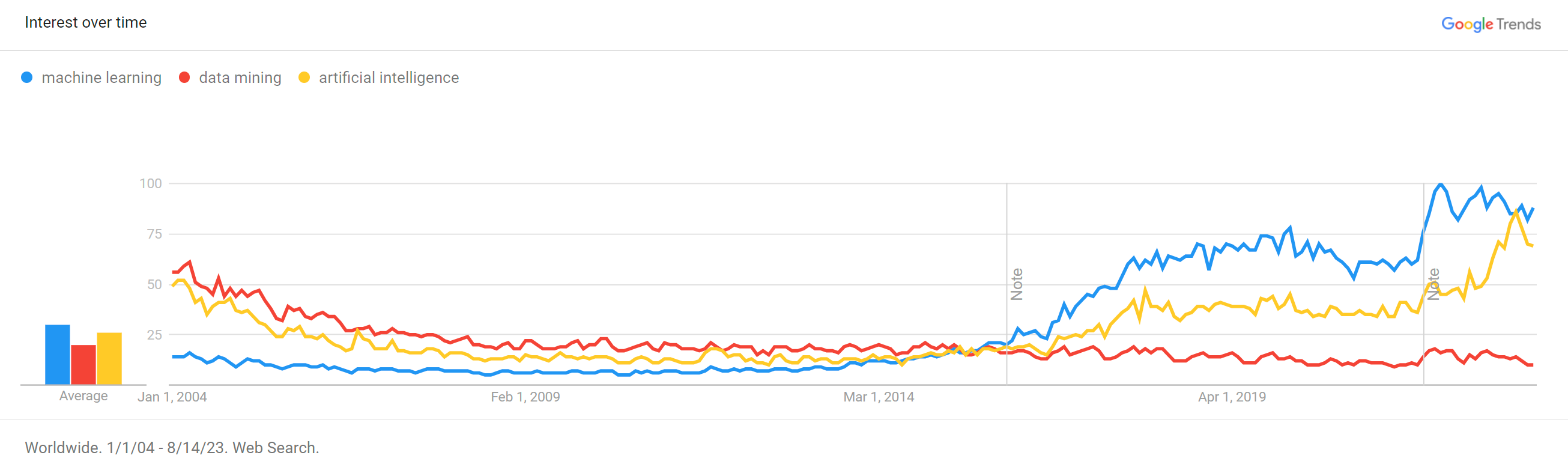

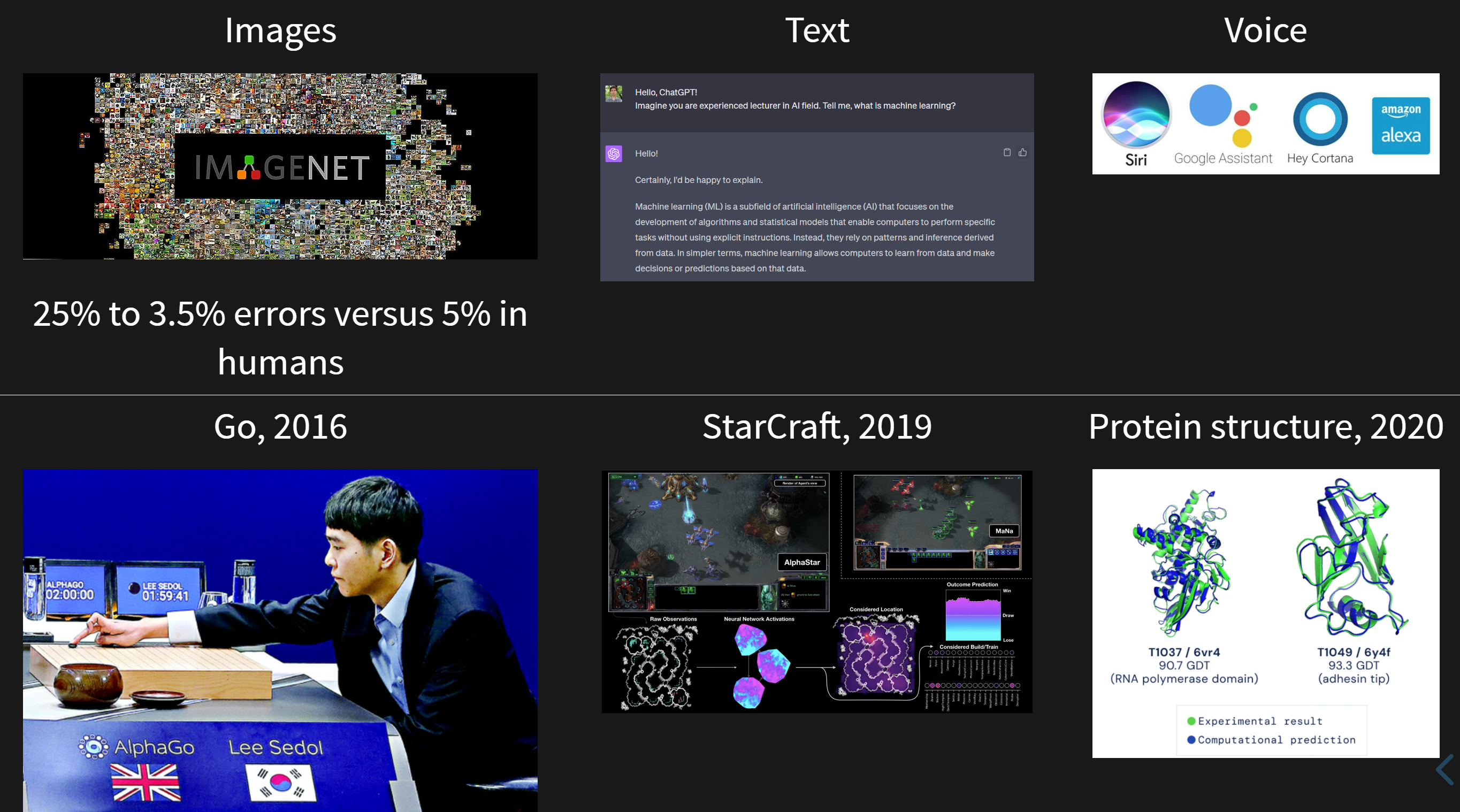

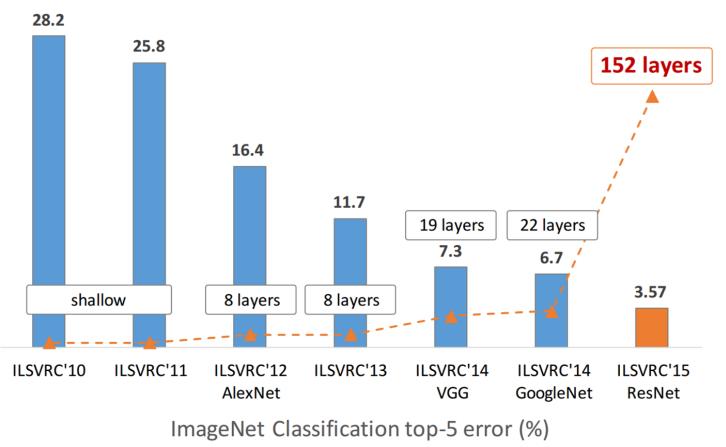

- Chronology of significant events in machine learning